Find PII and Inappropriate Content in your organization's file stores

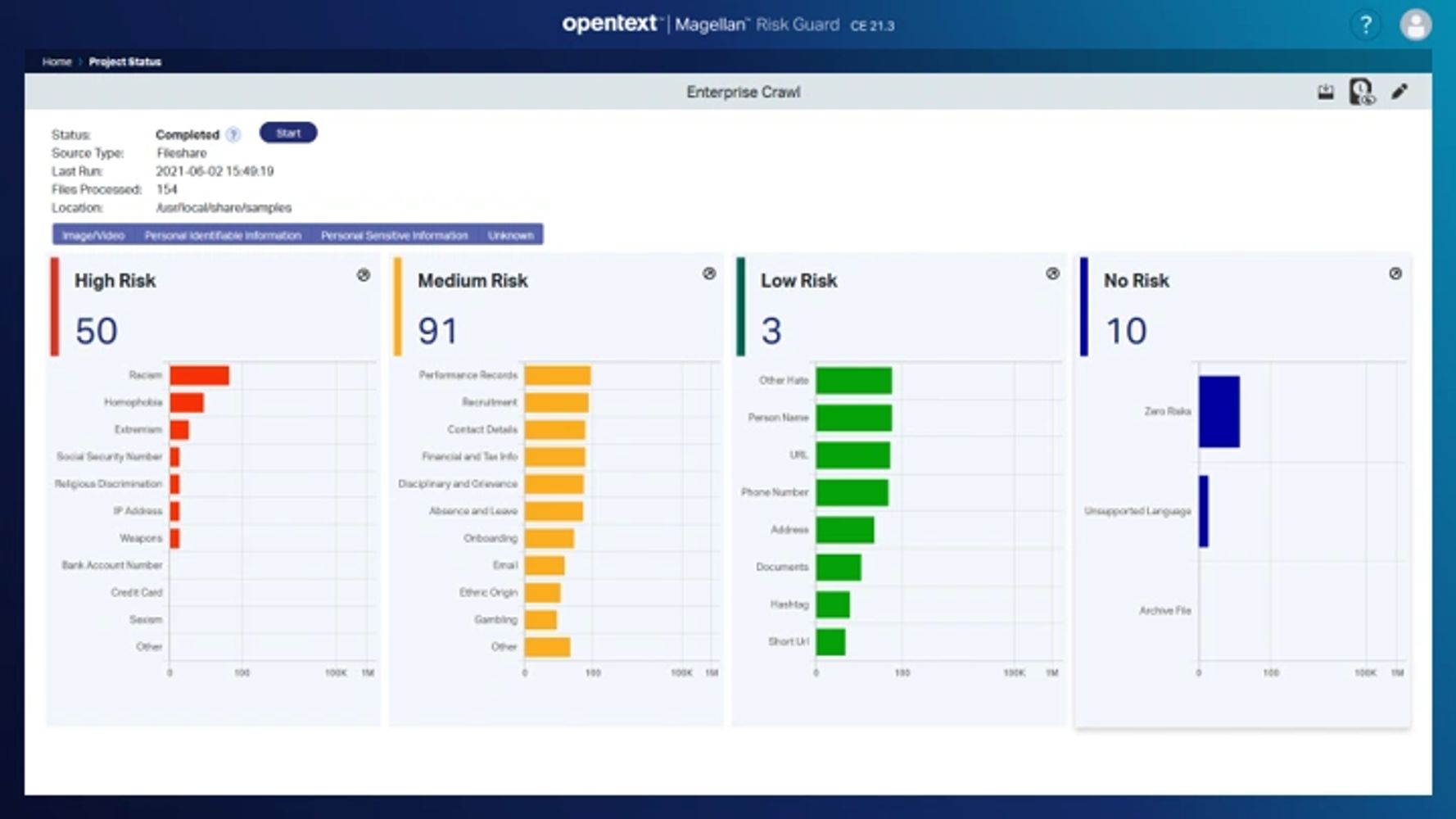

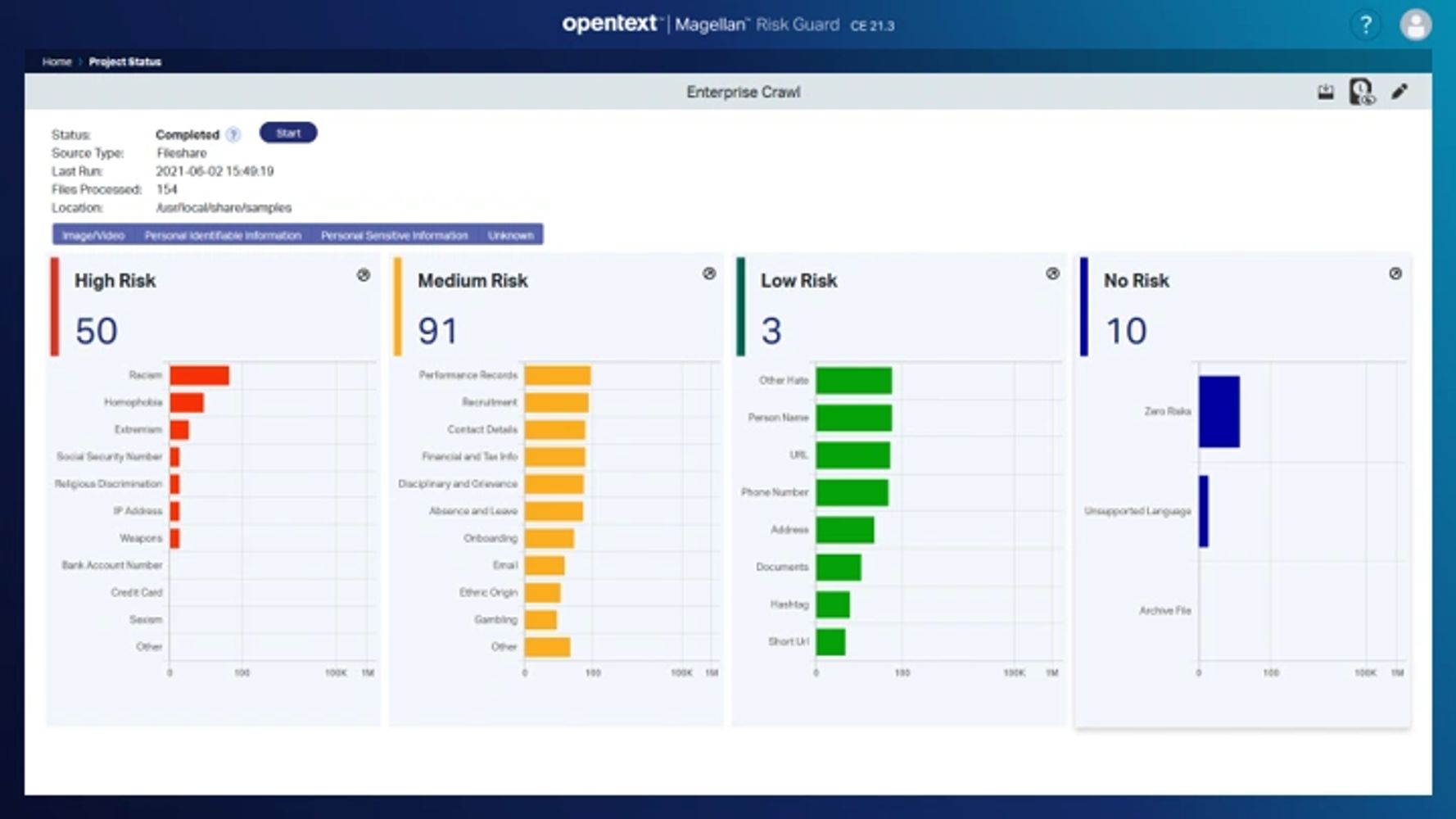

Scan your file shares and content repositories for Personally Identifiable Information (PII) using Natural Language Processing (NLP) and Natural Language Understanding (NLU). Knowing which documents contain PII, how risky they are, and where they are located helps you secure them before they become a liability that costs you heavy fines and legal fees.

Use computer vision to scan image and video files for inappropriate imagery such as drugs, violence, and pornography. Finding inappropriate images on your network shares reduces the reputational risk that can cost you clients.

The easy-to-use interface is designed so that a non-technical person can set up scans and set the risk level for each category. It also lets you move, copy, delete, or ignore files individually or in batches.

Using Natural Language Processing, Natural Language Understanding, and Computer Vision, Risk Guard can identify over 50 types of risk, including PII and Sensitive PII. The configurable risk assessment algorithm lets you adjust the threat level to your organization's tolerance for each scan location. For example, finding PII in an unsecured file share is a higher risk than finding it in a secure content repository.

Remediate the risky content you find by moving it to a secure repository or deleting it. You can review each file to see what risky content was found in it. The Search and Filter functionality lets you find content with specific risks, review it, and take action in batches.

You can export details of what was found and the action taken for your reporting and auditing needs.

Although many companies have policies and procedures in place to comply with GDPR, CCPA, and similar regulations, inadvertent violations may still occur in daily operations. For example, a document with PII may be stored in a secure location, but a user may have saved a copy to a file share that others can access. Finding and eliminating these risks can help you avoid fines and reduce the chance of the information leaking if an attacker gets into your network.

Much of the hard work of building and training AI has already been done. Drawing on over 10 years of experience providing Natural Language Processing capabilities, our computational linguists have built a robust set of models to meet your organization's need to find PII and inappropriate content. You don't need to hire a team of highly technical, high-priced resources to build a solution and do the hard work of training and testing models.

The interface is designed so that a non-technical person can set it up, configure it, and use it. Most AI tools in this space are designed for data scientists or other highly technical users. Our interface lets anyone take full advantage of its capabilities, which allows departments to use it without involving IT. Filtering and searching make it easy to narrow down to the type of content you are interested in and see the risks found and each file's location.

Finding content that puts your organization at risk is hard enough, and deciding how to deal with it is also challenging. The easy-to-use interface lets a non-technical person set a disposition on the content: move it, copy it, delete it, or flag it to be ignored.

Many AI solutions require sending your sensitive content to a cloud service, potentially exposing your organization's secrets. Our flexible deployment model lets you install the software wherever you want, with no need to access anything outside your firewall.

The ability to report on what was found and how it was dispositioned is vital to passing your audits. Knowing that the dark content lurking on your file shares has been remediated lets your organization focus on its core business.

Copyright © 2021 OpenText™ Magellan™ Risk Guard - All Rights Reserved.